1. Introduction

This project implements an automated pipeline for emotion classification using high-dimensional 3D facial landmark data extracted from video frames. The approach leverages computer vision, machine learning, and deep learning tools, with direct applications to patient risk monitoring and affective computing.

2. Methods and Workflow

2.1. Environment Setup and Libraries

Libraries: matplotlib, opencv, pandas, numpy, torch (PyTorch), and scikit-learn.

2.2. Data Configuration and Loading

The core dataset contains 478 3D facial landmarks per frame, extracted from labeled video samples and stored as a Parquet file.

Each record contains metadata (video_filename, frame_num, emotion) followed by the landmark coordinates for that frame, as in the example preview below:

| video_filename | frame_num | emotion | x_477 | y_477 | z_477 |
|---|---|---|---|---|---|
| 1027_IOM_HAP_XX.flv | 0 | Happy | 0.520409 | 0.306875 | 0.008225 |
| 1027_IOM_HAP_XX.flv | 1 | Happy | 0.520409 | 0.307399 | 0.008168 |

Table 1: Example preview (only the coordinates of landmark 477 are shown)
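A minimal sketch of loading the Parquet file and reshaping the coordinate columns into an array of shape (frames, 478, 3). It assumes the coordinate columns follow the `x_i`, `y_i`, `z_i` naming visible in Table 1 for i from 0 to 477. The file name `landmarks.parquet` and the helper names are hypothetical.

```python
import numpy as np
import pandas as pd

N_LANDMARKS = 478  # landmarks per frame, as stated in the dataset description
META_COLS = ["video_filename", "frame_num", "emotion"]


def coordinate_columns(n_landmarks: int = N_LANDMARKS) -> list[str]:
    """Column names ordered landmark by landmark: x_0, y_0, z_0, x_1, ...

    Assumption: columns are named x_i / y_i / z_i, as in the Table 1 preview.
    """
    return [f"{axis}_{i}" for i in range(n_landmarks) for axis in ("x", "y", "z")]


def to_landmark_array(df: pd.DataFrame, n_landmarks: int = N_LANDMARKS) -> np.ndarray:
    """Return a float32 array of shape (n_frames, n_landmarks, 3)."""
    coords = df[coordinate_columns(n_landmarks)].to_numpy(dtype=np.float32)
    # Each row is [x_0, y_0, z_0, x_1, ...], so reshaping groups (x, y, z) per landmark.
    return coords.reshape(len(df), n_landmarks, 3)


# Usage (hypothetical path; pd.read_parquet needs pyarrow or fastparquet installed):
# df = pd.read_parquet("landmarks.parquet")
# landmarks = to_landmark_array(df)   # shape (n_frames, 478, 3)
# meta = df[META_COLS]
```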

2.3. Visualization

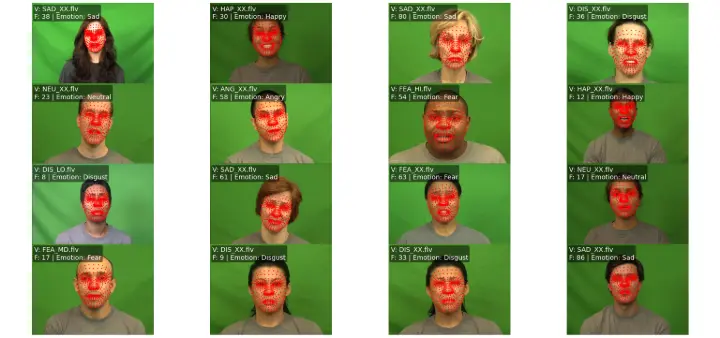

- Sixteen sample frames were randomly selected from the dataset for qualitative inspection.

- The normalized landmark coordinates were overlaid on the corresponding video frames, and each sample was annotated with its emotion label.

- Figure 1 shows example overlays: faces are masked for privacy, while the landmark points remain clearly visible for each emotion type.

Figure 1: Emotion Classification
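The overlay step can be sketched with NumPy and matplotlib. This sketch assumes the MediaPipe-style convention that x and y are normalized to [0, 1] by frame width and height, so pixel positions are obtained by scaling. That convention, the helper names and the 4×4 grid layout for the 16 samples are assumptions, not details confirmed by the dataset description.

```python
import matplotlib

matplotlib.use("Agg")  # render off-screen; remove this line for interactive use
import matplotlib.pyplot as plt
import numpy as np


def to_pixels(landmarks: np.ndarray, width: int, height: int) -> np.ndarray:
    """Map normalized (x, y) in [0, 1] to pixel coordinates; z is ignored.

    Assumption: x is scaled by frame width and y by frame height.
    """
    return landmarks[:, :2] * np.array([width, height], dtype=np.float32)


def plot_grid(frames, landmark_sets, labels, n_cols: int = 4):
    """Draw each RGB frame with its landmarks overlaid and its emotion as the title."""
    n_rows = int(np.ceil(len(frames) / n_cols))
    fig, axes = plt.subplots(n_rows, n_cols, figsize=(3 * n_cols, 3 * n_rows))
    for ax in np.ravel(axes):
        ax.axis("off")  # hide ticks on every cell, including unused ones
    for ax, frame, lms, label in zip(np.ravel(axes), frames, landmark_sets, labels):
        h, w = frame.shape[:2]
        px = to_pixels(lms, w, h)
        ax.imshow(frame)
        ax.scatter(px[:, 0], px[:, 1], s=1, c="lime")
        ax.set_title(label)
    fig.tight_layout()
    return fig
```

OpenCV returns frames in BGR order, so a frame read with `cv2.VideoCapture(...).read()` would need `cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)` before it is passed to `plot_grid`. Sixteen random rows could be drawn with `df.sample(16, random_state=0)`.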

2.4. Feature Engineering and Preprocessing

The 478 × 3 landmark coordinates of each frame are flattened into a single 1434-dimensional feature vector.

Emotion labels are converted to integer class indices with scikit-learn's LabelEncoder so they can serve as classification targets.
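Both preprocessing steps can be sketched as follows, assuming landmarks arrive as an array of shape (frames, 478, 3). The function names are hypothetical. LabelEncoder assigns indices in sorted order of the class names, and the fitted encoder's `classes_` attribute maps indices back to emotion names.

```python
import numpy as np
from sklearn.preprocessing import LabelEncoder


def build_features(landmarks: np.ndarray) -> np.ndarray:
    """Flatten (n_frames, 478, 3) landmarks into (n_frames, 1434) feature vectors."""
    n_frames = landmarks.shape[0]
    return landmarks.reshape(n_frames, -1).astype(np.float32)


def encode_labels(emotions):
    """Map emotion strings to integer class indices; return (indices, fitted encoder)."""
    encoder = LabelEncoder()
    y = encoder.fit_transform(emotions)
    return y, encoder
```

Typical usage would be `X = build_features(landmarks)` followed by `y, encoder = encode_labels(df["emotion"])`.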

2.5. Model Training

- The data was split into training and test sets (80/20) using train_test_split.

- A simple PyTorch neural network (EmotionClassifier) was built with the following layers:

  - Input layer: 1434 dimensions (478 × 3)

  - Hidden layer: 256 neurons with ReLU activation

  - Output layer: one unit per distinct emotion class

- The model was trained for 20 epochs using the Adam optimizer and cross-entropy loss.
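The training setup can be sketched as follows. The layer sizes, epoch count, optimizer, loss and 80/20 split come from the description above. The learning rate (1e-3), batch size (64), random seed and mini-batch loop are assumptions. The output layer emits raw logits because `nn.CrossEntropyLoss` applies log-softmax internally.

```python
import numpy as np
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset
from sklearn.model_selection import train_test_split


class EmotionClassifier(nn.Module):
    """One-hidden-layer MLP: 1434 -> 256 (ReLU) -> n_classes logits."""

    def __init__(self, n_classes: int, n_features: int = 1434, n_hidden: int = 256):
        super().__init__()
        self.net = nn.Sequential(
            nn.Linear(n_features, n_hidden),
            nn.ReLU(),
            nn.Linear(n_hidden, n_classes),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.net(x)  # raw logits; CrossEntropyLoss applies log-softmax itself


def train(X: np.ndarray, y: np.ndarray, epochs: int = 20, lr: float = 1e-3,
          batch_size: int = 64, seed: int = 0):
    """80/20 split, then Adam + cross-entropy; return model, test split, epoch losses."""
    torch.manual_seed(seed)  # reproducible weight init and shuffling
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.2, random_state=seed)
    # Labels are assumed to be 0..K-1, as produced by LabelEncoder.
    model = EmotionClassifier(n_classes=int(y.max()) + 1, n_features=X.shape[1])
    loader = DataLoader(
        TensorDataset(torch.from_numpy(X_train).float(), torch.from_numpy(y_train).long()),
        batch_size=batch_size, shuffle=True)
    optimizer = torch.optim.Adam(model.parameters(), lr=lr)
    loss_fn = nn.CrossEntropyLoss()
    losses = []
    for _ in range(epochs):
        model.train()
        total = 0.0
        for xb, yb in loader:
            optimizer.zero_grad()
            loss = loss_fn(model(xb), yb)
            loss.backward()
            optimizer.step()
            total += loss.item() * len(xb)
        losses.append(total / len(X_train))  # mean training loss for this epoch
    return model, (X_test, y_test), losses
```

Passing `stratify=y` to `train_test_split` would keep class proportions equal across the two splits. The description does not state whether the original code does this.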

2.6. Evaluation Metrics

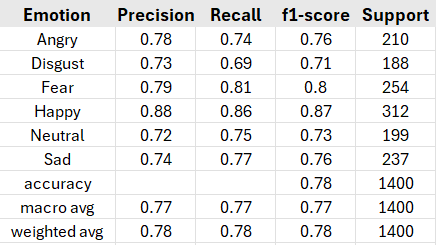

After training, predictions were generated for the test set.

Per-class precision, recall, and F1-score, together with overall accuracy, were computed for all emotion labels using scikit-learn's classification_report.
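The evaluation step can be sketched as follows. This assumes a trained model that outputs logits and a fitted LabelEncoder whose `classes_` supply the class names. The `evaluate` helper is hypothetical. Predictions are taken as the argmax over the logits.

```python
import numpy as np
import torch
from torch import nn
from sklearn.metrics import classification_report


def evaluate(model: nn.Module, X_test: np.ndarray, y_test: np.ndarray, class_names) -> str:
    """Predict test labels by argmax over logits and return sklearn's text report."""
    model.eval()  # inference mode (matters for dropout/batch norm; harmless otherwise)
    with torch.no_grad():
        logits = model(torch.as_tensor(X_test, dtype=torch.float32))
    y_pred = logits.argmax(dim=1).numpy()
    return classification_report(
        y_test, y_pred,
        labels=np.arange(len(class_names)),  # list every class, even if absent from y_test
        target_names=list(class_names),
        zero_division=0,
    )
```

With the earlier steps in place, this would be called as `print(evaluate(model, X_test, y_test, encoder.classes_))`.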

3. Results

Data Preview: The dataset covers multiple emotion labels, and every video frame is represented by dense 3D facial landmark coordinates.

Visualization: Grid plots display the facial landmark mapping and emotion labels for diverse subjects (see Figure 1).

Model Performance:

- Training loss decreased consistently over the 20 epochs, indicating steady convergence.

- The final test-set accuracy and per-class F1-scores (Table 2) indicate how well the model generalizes emotion classification from landmark data.

Table 2: Classification report

4. Discussion

The pipeline demonstrated robust preprocessing, landmark visualization, and baseline deep learning performance with only geometric facial features.

Challenges remain. Real-world emotion detection may require multimodal data and temporal modeling of frame sequences. Future work could also integrate agent-based coordination logic for patient monitoring scenarios.

5. Conclusion

This project validates a modular workflow for 3D facial landmark-based emotion classification, enabling scalable vision-based emotion and risk monitoring. The results demonstrate both accuracy and interpretability, offering a strong foundation for further developments in clinical AI and affective analytics.

6. Dataset Information

The experiments and results described in this project are based on the publicly available dataset from Kaggle:

"478-Point Normalized 3D Facial Landmark Dataset"

This dataset includes synchronized video clips and detailed 3D facial landmark coordinates, along with emotion labels for each frame, enabling comprehensive research and development in emotion recognition and affective computing.

7. Code

Get the source code at https://github.com/iftiaj-com/emotionDrivenPatientRiskDetection